Training an Image Classifier on MNIST

Overview

The sample below is part of the TensorFlow 2 advanced quickstart. It downloads the MNIST images together with their labels as NumPy arrays, then shuffles and batches the data. The Keras model is built with the model subclassing API and stacks four layers: a convolution, a flatten layer, a dense layer with 128 outputs, and a final dense layer with 10 outputs (one per digit class).
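To make the layer stack concrete, the sketch below works out the tensor shape and trainable-parameter count after each layer in plain Python. It assumes the Keras defaults that the listing relies on (a Conv2D layer with 'valid' padding and stride 1) and 28x28 single-channel MNIST images. The helper names are illustrative and are not part of TensorFlow.

```python
import math

def conv2d_valid(height, width, channels_in, filters, kernel):
    """Output shape and parameter count of a stride-1, 'valid'-padded convolution."""
    out_h = height - kernel + 1
    out_w = width - kernel + 1
    params = kernel * kernel * channels_in * filters + filters  # weights + biases
    return (out_h, out_w, filters), params

def dense(units_in, units_out):
    """Output size and parameter count of a fully connected layer."""
    return units_out, units_in * units_out + units_out  # weights + biases

# Conv2D(32, 3) applied to a 28x28x1 MNIST image.
conv_shape, conv_params = conv2d_valid(28, 28, 1, filters=32, kernel=3)

# Flatten has no parameters; it only reshapes the convolution output into one vector.
flat_size = math.prod(conv_shape)

d1_size, d1_params = dense(flat_size, 128)
d2_size, d2_params = dense(d1_size, 10)
total_params = conv_params + d1_params + d2_params

# Batches per epoch for the 60,000 training images at batch size 32.
train_batches = math.ceil(60000 / 32)

print(conv_shape, flat_size, total_params, train_batches)
```

Nearly all of the parameters sit in the first dense layer, because it connects every flattened activation to each of its 128 units.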

The optimizer is Adam, a stochastic gradient descent method based on adaptive estimates of the first- and second-order moments of the gradients. It is efficient for problems with large amounts of data or many parameters, as is the case with images.
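As a rough illustration of what "adaptive estimates of moments" means, here is a plain-Python sketch of the textbook Adam update for a single scalar parameter. It is not the TensorFlow implementation, which differs in small details such as how epsilon is applied. The default values mirror those of tf.keras.optimizers.Adam, and the quadratic toy problem is an assumption made only for this example.

```python
import math

def adam_step(param, grad, m, v, t, lr=0.001, beta_1=0.9, beta_2=0.999, eps=1e-7):
    """One Adam update for a scalar parameter; t is the 1-based step count."""
    m = beta_1 * m + (1 - beta_1) * grad          # running mean of gradients (1st moment)
    v = beta_2 * v + (1 - beta_2) * grad * grad   # running mean of squared gradients (2nd moment)
    m_hat = m / (1 - beta_1 ** t)                 # bias correction for the zero initialisation
    v_hat = v / (1 - beta_2 ** t)
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v

# Toy problem (illustrative assumption): minimise f(x) = (x - 3)^2 starting from x = 0.
x, m, v = 0.0, 0.0, 0.0
for t in range(1, 501):
    grad = 2 * (x - 3)
    x, m, v = adam_step(x, grad, m, v, t, lr=0.1)

print(x)  # ends close to the minimum at 3
```

Because the step is divided by the square root of the second-moment estimate, its size depends on the learning rate rather than on the raw gradient magnitude, which is one reason Adam needs little tuning across differently scaled parameters.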

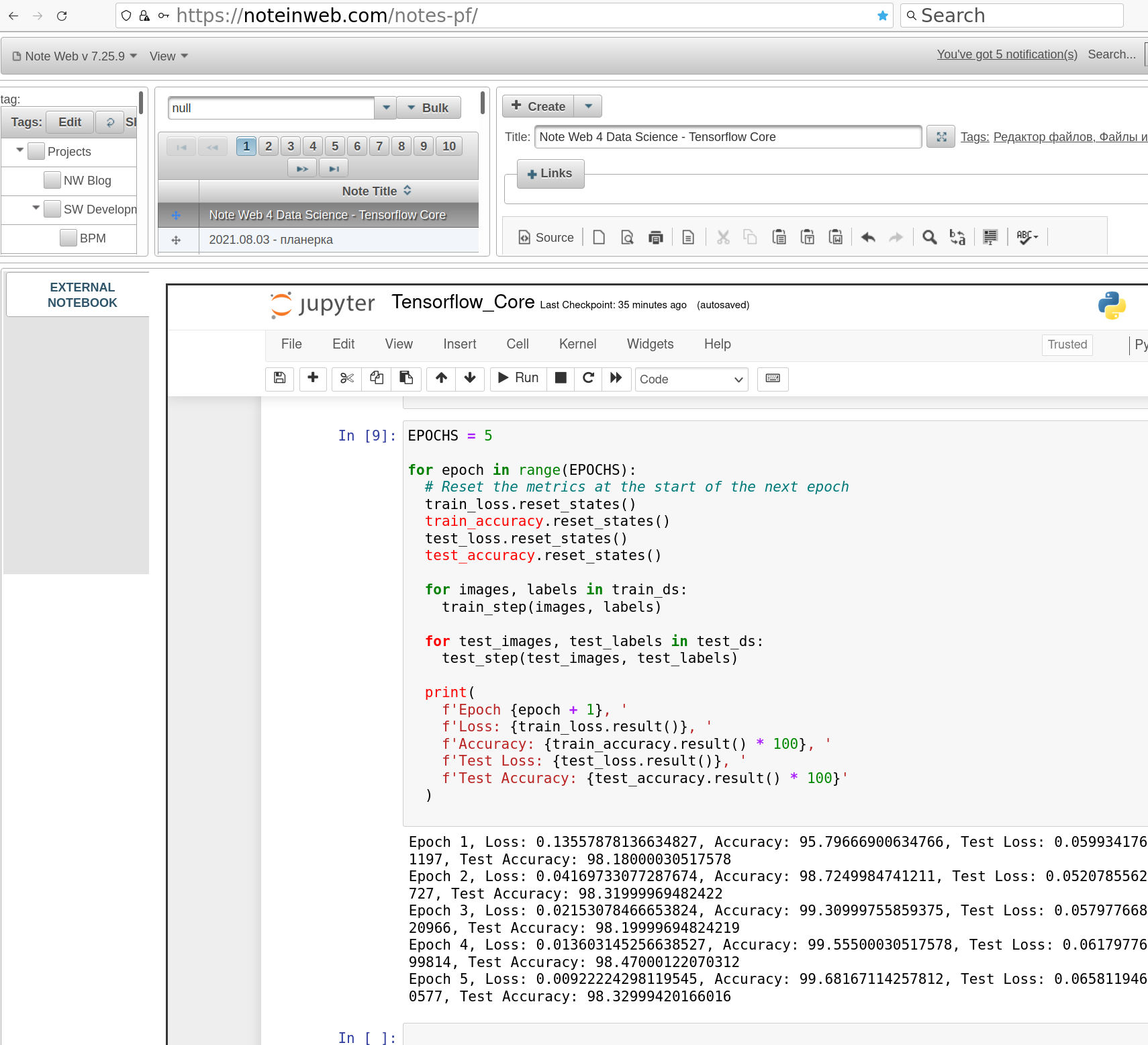

The model is then trained in a custom loop using tf.GradientTape and evaluated with the test_step function. Training runs for 5 epochs.

Implementation

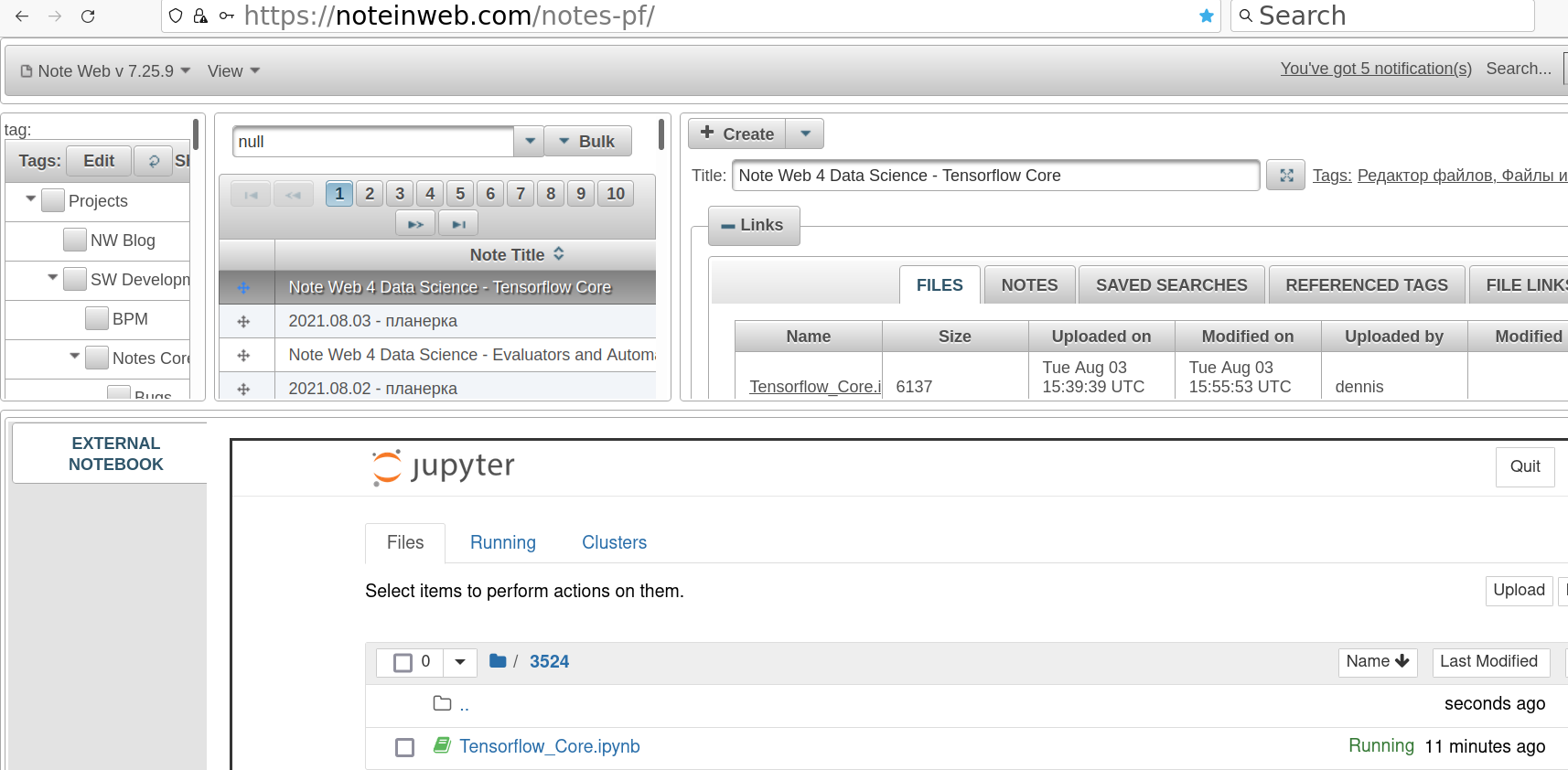

The Jupyter notebook attached to this article contains the following code, which implements the data loading and model training described above:

import tensorflow as tf
from tensorflow.keras.layers import Dense, Flatten, Conv2D
from tensorflow.keras import Model

# Load MNIST as NumPy arrays and scale pixel values from [0, 255] to [0, 1]
mnist = tf.keras.datasets.mnist
(x_train, y_train), (x_test, y_test) = mnist.load_data()
x_train, x_test = x_train / 255.0, x_test / 255.0

# Add a channels dimension
x_train = x_train[..., tf.newaxis].astype("float32")
x_test = x_test[..., tf.newaxis].astype("float32")

# Shuffle the training set and split both sets into batches of 32
train_ds = tf.data.Dataset.from_tensor_slices(
    (x_train, y_train)).shuffle(10000).batch(32)
test_ds = tf.data.Dataset.from_tensor_slices((x_test, y_test)).batch(32)

class MyModel(Model):
    def __init__(self):
        super().__init__()
        self.conv1 = Conv2D(32, 3, activation='relu')
        self.flatten = Flatten()
        self.d1 = Dense(128, activation='relu')
        self.d2 = Dense(10)

    def call(self, x):
        x = self.conv1(x)
        x = self.flatten(x)
        x = self.d1(x)
        return self.d2(x)

# Create an instance of the model
model = MyModel()

loss_object = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)
optimizer = tf.keras.optimizers.Adam()

train_loss = tf.keras.metrics.Mean(name='train_loss')
train_accuracy = tf.keras.metrics.SparseCategoricalAccuracy(name='train_accuracy')

test_loss = tf.keras.metrics.Mean(name='test_loss')
test_accuracy = tf.keras.metrics.SparseCategoricalAccuracy(name='test_accuracy')

@tf.function
def train_step(images, labels):
    with tf.GradientTape() as tape:
        # training=True is only needed if there are layers with different
        # behavior during training versus inference (e.g. Dropout).
        predictions = model(images, training=True)
        loss = loss_object(labels, predictions)
    gradients = tape.gradient(loss, model.trainable_variables)
    optimizer.apply_gradients(zip(gradients, model.trainable_variables))

    train_loss(loss)
    train_accuracy(labels, predictions)

@tf.function
def test_step(images, labels):
    # training=False is only needed if there are layers with different
    # behavior during training versus inference (e.g. Dropout).
    predictions = model(images, training=False)
    t_loss = loss_object(labels, predictions)

    test_loss(t_loss)
    test_accuracy(labels, predictions)

EPOCHS = 5

for epoch in range(EPOCHS):
    # Reset the metrics at the start of the next epoch
    # (older TensorFlow 2 releases name this method reset_states())
    train_loss.reset_state()
    train_accuracy.reset_state()
    test_loss.reset_state()
    test_accuracy.reset_state()

    for images, labels in train_ds:
        train_step(images, labels)

    for test_images, test_labels in test_ds:
        test_step(test_images, test_labels)

    print(
        f'Epoch {epoch + 1}, '
        f'Loss: {train_loss.result()}, '
        f'Accuracy: {train_accuracy.result() * 100}, '
        f'Test Loss: {test_loss.result()}, '
        f'Test Accuracy: {test_accuracy.result() * 100}'
    )
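The final Dense(10) layer in the listing has no activation, so the model outputs raw logits, which is why the loss is created with from_logits=True. As a rough plain-Python sketch of what that loss computes for a single sample (this is not the TensorFlow implementation, and the function names and example logits are made up for illustration):

```python
import math

def softmax(logits):
    """Convert raw logits into probabilities, shifting by the max for numerical stability."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sparse_categorical_crossentropy(label, logits):
    """Negative log-probability assigned to the integer class `label`."""
    return -math.log(softmax(logits)[label])

# Hypothetical logits for a 3-class problem; class 0 has the largest score.
logits = [2.0, 0.5, -1.0]
loss_correct = sparse_categorical_crossentropy(0, logits)  # true label matches the top logit
loss_wrong = sparse_categorical_crossentropy(2, logits)    # true label has the lowest logit
print(loss_correct, loss_wrong)
```

The loss is small when the true class has the highest logit and grows as the model puts probability elsewhere. The Keras loss object additionally averages this value over the batch.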

Conclusion

Feeding a few arbitrary images of matching dimensions, in NumPy format, to the trained model gives an impression of 98.3% classification accuracy.
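Since the model returns logits, the predicted digit is the index of the largest logit, and accuracy is the share of predictions that match the labels. The plain-Python sketch below shows that calculation on made-up logits. The helper names and numbers are illustrative only and are unrelated to the figure quoted above.

```python
def predict_class(logits):
    """Index of the largest logit, i.e. the predicted class."""
    return max(range(len(logits)), key=lambda i: logits[i])

def accuracy(batch_logits, labels):
    """Fraction of samples whose predicted class equals the true label."""
    hits = sum(predict_class(row) == y for row, y in zip(batch_logits, labels))
    return hits / len(labels)

# Hypothetical logits for four samples of a 3-class problem.
batch_logits = [
    [2.1, 0.3, -1.0],   # predicted class 0
    [0.2, 1.7, 0.1],    # predicted class 1
    [-0.5, 0.0, 3.2],   # predicted class 2
    [1.5, 1.0, 0.2],    # predicted class 0
]
labels = [0, 1, 2, 1]   # the last sample is misclassified
acc = accuracy(batch_logits, labels)
print(f"{acc * 100:.1f}%")
```

With the trained model, the corresponding step would presumably be to call model(images, training=False) and take the argmax over the last axis of the result.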

References

- https://www.tensorflow.org/tutorials/quickstart/advanced

- TensorFlow Machine Learning Projects by Ankit Jain, ISBN 978-1-78913-221-2

Note In Web, Inc. © September 2022-2026; Denys Havrylov Ⓒ 2018-August 2022